White vs. black: YouTube accidentally blocks chess player for “racist language”

The words “white” and “black” are to chess players what “blue” and “gray” are to weathermen. Yet, according to new research, they pose a challenge to algorithms designed to detect racism online. Earlier, YouTube probably took a chess player offline after its AI algorithm incorrectly labeled certain terms as ‘hate speech’.

Last June, the popular YouTube channel of Croatian chess vlogger Antonio Radić, known as “agadmator,” was blocked for “harmful and dangerous” content. Radić was able to return to the platform within 24 hours, but YouTube did not explain the reason for the ban.

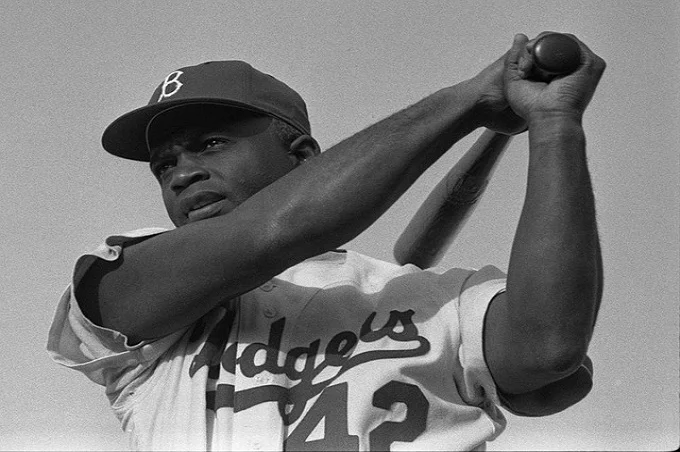

The case caught the interest of researchers, who were struck mainly by the timing of the incident. The channel went offline after an interview with Hikaru Nakamura, a multiple-time chess champion and once the youngest American to earn the Grandmaster title. As is usual in chess, the conversation involved a lot of talk about ‘black’ and ‘white’.

‘black’, ‘white’ and ‘attack’

“We don’t know what tools YouTube uses, but if they rely on artificial intelligence (AI) to detect racist language, these kinds of accidents can easily happen,” said KhudaBukhsh, a computer scientist at Carnegie Mellon University’s Language Technologies Institute.

To test this suspicion, the researchers screened more than 680,000 comments from five popular chess-related YouTube channels. An algorithm labeled nearly a thousand of them as ‘hate speech’. On closer inspection, however, more than 80 percent of those flagged comments turned out to contain no racist content at all. Instead, they were full of words such as ‘black’, ‘white’, ‘attack’, and ‘threat’.
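To make this failure mode concrete, here is a minimal Python sketch of a context-blind keyword flagger. It is a toy under stated assumptions: the word list `SUSPECT_WORDS`, the helper names `naive_flag` and `false_positive_share`, and the sample comments with their labels are all hypothetical, and nothing here reflects the classifiers the researchers actually tested or whatever YouTube uses. It only shows how a model that reacts to individual words, ignoring context, ends up flagging harmless chess commentary.

```python
import re

# Hypothetical word list, for illustration only; real moderation
# systems are not known to work this way.
SUSPECT_WORDS = {"black", "white", "attack", "threat"}

def naive_flag(comment: str) -> bool:
    """Return True if the comment contains any word from SUSPECT_WORDS,
    ignoring case and all surrounding context."""
    tokens = set(re.findall(r"[a-z]+", comment.lower()))
    return not tokens.isdisjoint(SUSPECT_WORDS)

def false_positive_share(labelled):
    """Among comments the flagger marks, return the fraction a human
    reviewer labelled as not hateful (None if nothing was flagged)."""
    flagged = [is_hateful for text, is_hateful in labelled if naive_flag(text)]
    if not flagged:
        return None
    return sum(1 for h in flagged if not h) / len(flagged)

# Made-up chess comments, each labelled (hypothetically) as not hateful.
sample = [
    ("White's attack on the kingside is a real threat.", False),
    ("Black should trade queens here.", False),
    ("Great video, thanks for the analysis!", False),
]

print([naive_flag(text) for text, _ in sample])  # [True, True, False]
print(false_positive_share(sample))              # 1.0
```

Because every flagged comment in this invented sample is harmless, the false-positive share comes out at 1.0; in the researchers' real data the figure was above 80 percent.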

The accuracy of this kind of software depends on the examples it learns from, KhudaBukhsh said. He recalled, for example, a student exercise in which the goal was to tell “lazy dogs” from “active dogs” in a set of photos.

Many of the training photos of active dogs showed large expanses of grass, because running dogs were often photographed from a distance. As a result, the program sometimes classified photos containing a lot of grass as active dogs, even when no dog appeared in them.
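The grass problem is a classic case of a model latching onto a spurious correlation. The Python sketch below reduces each photo to a single invented feature (the fraction of the image covered by grass) and fits a one-feature threshold rule, a so-called decision stump. The data, the feature, and the function names `learn_threshold` and `classify` are hypothetical and are not taken from KhudaBukhsh's actual exercise.

```python
# Hypothetical training photos, each reduced to one invented feature:
# the fraction of the image covered by grass, paired with its label.
TRAINING = [
    (0.80, "active"), (0.70, "active"), (0.75, "active"),
    (0.10, "lazy"), (0.20, "lazy"), (0.15, "lazy"),
]

def learn_threshold(data):
    """Return the grass-fraction cutoff (chosen from the training values)
    that misclassifies the fewest training photos: a decision stump."""
    best_t, best_errors = None, len(data) + 1
    for t in sorted(x for x, _ in data):
        # Predict "active" when grass >= t; count disagreements with the labels.
        errors = sum((x >= t) != (label == "active") for x, label in data)
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t

def classify(grass_fraction, threshold):
    """Label a photo using nothing but how much grass it shows."""
    return "active" if grass_fraction >= threshold else "lazy"

threshold = learn_threshold(TRAINING)
print(threshold)                  # 0.7
print(classify(0.95, threshold))  # active (an empty lawn with no dog at all)
print(classify(0.05, threshold))  # lazy
```

Since grass is the only signal this toy model ever sees, it fits the training photos perfectly and still labels an empty lawn as an “active dog” photo.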

Besides human moderators, YouTube also uses AI algorithms to detect banned content. If these algorithms are trained on too few or unrepresentative examples, however, they can become ‘oversensitive’ and block the wrong content. The researchers therefore recommend incorporating chess language into these algorithms to prevent similar misunderstandings in the future.