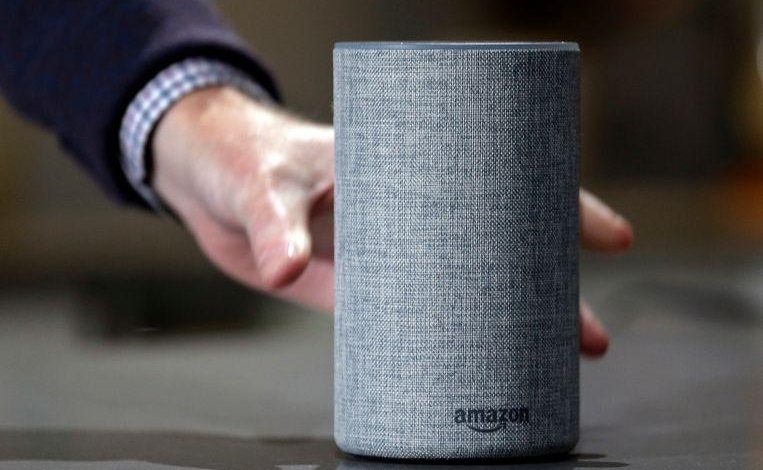

Virtual assistant Alexa gives Amazon customer a bizarre order

Apple has Siri, Amazon has Alexa: a computer-controlled virtual assistant built into Amazon’s smart speakers. But the computer-controlled voice recently told an Amazon customer to do something particularly disturbing: “Kill your foster parents”.

The user, who heard the message through his speaker, did not consider for a moment actually carrying out the instruction. Instead, he wrote a harsh review on Amazon.com. In it, he called Alexa “a new form of creepy”.

An investigation into the speaker and its bizarre utterance suggests that the quote was picked up from Reddit.com. Reddit is an American social news website where users can post messages in the form of a link or their own text.

The result is a long list of crude messages, including the one that the Amazon user heard.

Not the first time

The recent instruction to kill foster parents is not the first mistake Alexa has made, though it is perhaps the most bizarre. Amazon is training the device to behave as humanly as possible so that it can eventually join in conversations with a question or comment.

This is done with computer programs that transcribe human speech and then recommend the best possible response based on observed patterns. “Kill your foster parents” was undoubtedly a mistake.